27 February 2024

AUTHOR: Megan Andrews

Artificial Intelligence (AI) is on the cusp of reshaping society, bringing unprecedented opportunities and potentially helping to overcome some of humanity’s greatest challenges. But are we embracing AI with minds that are too trusting – and turning a blind eye to the negative consequences?

There are many questions around the ethics of AI. Are workforce impacts being appropriately considered? Are the outputs reliable? Is it reasonable, in the interests of progress and market economies, to let technology power ahead – and watch the chips fall as they may?

Some of the University of South Australia’s AI experts join the debate and share their views on the most pressing concerns.

Taking jobs from the future

Associate Professor Sukhbir Sandhu leads UniSA’s Centre for Workplace Excellence. She says AI’s influence on workplaces will clearly grow, but there will be plenty of positive impacts to mitigate the negative.

“Yes, we will see more job displacement where repetitive jobs are increasingly replaced by AI; however, we’ll also see growing job transformation, where AI helps workers do their jobs better – such as assisted surgeries or radiology,” she says. “And we’ll see new job creation, such as coders and trainers to advance our use of AI.”

Assoc Prof Sandhu says it’s possible to ensure this occurs alongside an intimate understanding of ethics.

“Corporations are, whether we like it or not, the most powerful actors in our society with a disproportionate influence on our economic systems.

“As a society, we need to work with them to ensure the balance is towards, first, a deep understanding of what ethics and AI mean, and secondly, ensuring that our move towards AI is not stripped of its ethical underpinnings.”

She’s hesitant to recommend government regulation as the primary answer, although global momentum is building.

In December 2023, the European Union reached agreement on landmark legislation to regulate AI. Europe’s pending AI Act is a global first. If passed, it would ensure tech companies disclose data used to train AI systems and carry out product testing, especially when used in high-risk applications such as self-driving vehicles and healthcare.

The EU law is the most comprehensive effort to regulate AI. In the US, a presidential order was issued in October 2023 that addressed AI’s impact on national security and discrimination. In China, regulations require AI to reflect socialist values. Countries such as the UK and Japan have taken a hands-off approach.

Australia has a patchwork of legislative pieces for specific settings and is working towards next steps, with the Australian Government’s interim response to the Safe and Responsible AI in Australia Consultation announcing a balanced approach to address risk while supporting innovation. This includes legislating high-risk AI applications, establishing an expert advisory group, and working with industry to develop voluntary standards.

“Government regulation is important, but it’s often too little, too late,” Assoc Prof Sandhu says.

“On global issues such as AI, it requires international governments to come together. Not only is this extremely challenging, but it can also dampen the ability of organisations to respond quickly to opportunities.”

Instead, she suggests self-regulation will come to the fore, just as industry groups have come together to address themes such as environment and community.

This is underway, with Google, Microsoft, Anthropic and OpenAI committing last year to establish a cooperative body that will oversee the safe development of AI.

Assoc Prof Sandhu says self-regulatory mechanisms are valuable when done well, but can create challenges, such as barriers to entry.

“They can also lead to behaviours like ‘greenwashing’ in the environmental space, enabling businesses to leverage instruments to falsely promote they are doing the right thing.

“At this early stage, however, I think self-regulation may be our best bet to put boundaries in place around the directions AI will take.”

Associate Professor Wolfgang Mayer is based at UniSA’s Industrial AI Research Centre. His research uses a ‘best of both worlds’ approach – combining the power of AI with ‘traditional’ methods to solve problems.

He is not concerned with AI taking over jobs, though he concedes machines will replace some roles.

“In most cases, however, it will be that people using AI will replace people that do not.

“It is more likely people will just do different things, as with previous industrial revolutions. People don’t simply lose their jobs, they do different kinds of work and hopefully it will be more meaningful and value-adding.”

Assoc Prof Mayer is working on a project with UniSA’s Rosemary Bryant AO Research Centre, using AI to analyse de-identified data across different clinical systems to predict risk factors in hospitals, such as inpatient falls.

“In applications such as this, having the toolbox is only one part of the equation. Translating the AI findings into the workplace opens up a whole new set of opportunities.”

Similarly, while AI is purported to make programmers immensely more productive, it necessitates additional work to ensure data integrity and security.

Assoc Prof Mayer uses an example of work his team is undertaking to speed up the coding of simulation models that will help defence users make decisions.

“How are we guaranteed that the code these generators spit out is 100% correct and free of security problems? It can’t be assumed.”

This extends to applications that do not use AI themselves but may have been developed with AI assistance. Assoc Prof Mayer says the responsibility for code integrity lies with its programmer, but there will be times when less skilled or time-poor coders may not check AI-generated code in enough detail.

“Security aspects of software are notoriously difficult to handle. The code may look fine, but it could incorporate a problem, such as a security risk, that remains undetected.”

Bias in outputs

Assoc Prof Sandhu agrees that despite the coding efficiencies AI enables, it brings new demands.

“AI is only as good as its algorithms, so coders need an innate understanding of equity, fairness and bias,” she says.

“There is certainly the risk of AI unintentionally amplifying societal discrimination and racism, and we know this has happened in the past with recruitment tools.”

Professor Lin Liu, also with UniSA’s Industrial AI Research Centre, says a key issue is machine learning’s reliance on historical data, without looking deeper.

“For example, gender, age or postcode may be picked up as predictors of student success, but any model developed on this data would be discriminatory if applied in the workplace,” she says.

Prof Liu has supervised several PhD candidates investigating this area, including Oscar Blessed Deho, who recently completed his studies in algorithmic fairness in learning models.

While research shows that demographic group imbalance in the datasets used for training predictive models can be a source of algorithmic bias, Oscar’s research reveals that even algorithms designed to ensure demographic balance do not necessarily produce fair AI models.

For example, several models have sought to predict student success in higher education. These tend to discriminate against minority groups such as international students – as the models learn from historical generalisations based on demographic groups.

“There are other features of students that affect their outcomes, not just demographic attributes,” Oscar says.

“Variables including high school score, for example, or how much effort a student puts into their studies, are also relevant.

“If we solely focus on balancing demographic distribution and not addressing those other features, a model might still make discriminatory decisions.”

His team tested a new approach that accounted for relevant variables, and found it significantly improved model fairness.

Oscar says by dissociating the relationship between demographic attributes and the outcome being predicted, a model cannot tell whether a person is a citizen, so it focuses on the relevant features.

He says while this approach is not overly complex, people need to know to adopt it.

“It doesn’t help that there are different metrics for measuring fairness,” Oscar says. “There are currently about 26 different metrics, and the number keeps increasing. It can be challenging to know what metrics to use for specific applications.”
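To illustrate why the choice of metric matters, here is a minimal sketch in Python that computes two widely used fairness measures, demographic parity difference and equal opportunity difference, on a tiny made-up dataset. The data, the group labels "a" and "b", and the function names are hypothetical and are not drawn from Oscar’s research; the sketch only shows that the same predictions can look fair on one metric and unfair on another.

```python
def rate(values):
    """Return the fraction of 1s in a list (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_diff(y_pred, group):
    """Positive-prediction rate of group 'a' minus that of group 'b'."""
    a = [p for p, g in zip(y_pred, group) if g == "a"]
    b = [p for p, g in zip(y_pred, group) if g == "b"]
    return rate(a) - rate(b)


def equal_opportunity_diff(y_true, y_pred, group):
    """True-positive rate of group 'a' minus that of group 'b'."""
    a = [p for t, p, g in zip(y_true, y_pred, group) if g == "a" and t == 1]
    b = [p for t, p, g in zip(y_true, y_pred, group) if g == "b" and t == 1]
    return rate(a) - rate(b)


# Hypothetical data: 1 = "student succeeds", 0 = "student does not succeed".
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 1, 0, 1, 0, 0, 0]  # actual outcomes (made up)
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]  # model predictions (made up)

dp = demographic_parity_diff(y_pred, group)
eo = equal_opportunity_diff(y_true, y_pred, group)

print(f"Demographic parity difference: {dp:.3f}")
print(f"Equal opportunity difference:  {eo:.3f}")
```

In this toy case both groups receive positive predictions at the same rate, so the demographic parity difference is zero, yet students in group "a" who actually succeed are recognised less often than those in group "b", so the equal opportunity difference is not zero.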

He is now focusing on the longevity of fairness in AI models: even when a model is trained to be fair, will it still be fair next year?

“The real world is very dynamic, distribution of data changes, and that can impact fairness.”

Tipping the scales to societal good

Prof Liu says there are many risks associated with AI.

“People with malicious intent will always look for opportunities,” she says.

“For example, courses that teach students to make our networks safe could also breed new waves of hackers.

“And machine learning models are highly vulnerable to human interference. If someone wants to cheat a model, it can be done – researchers have shown how easy it is to trick autonomous cars by putting simple stickers over road signs.

“However, despite its potential to be detrimental to society, there is potential for enormous progress.”

She points to environmental sustainability.

“AI has the potential to multiply energy efficiency exponentially, through improvements to monitoring, conservation and advancing renewable energy,” Prof Liu says.

“AI can assist us in ways that humans cannot, or cannot as quickly and accurately, such as the intelligent use of drones for conservation, or precision agriculture.”

Associate Professor Belinda Chiera, Deputy Director of the Industrial AI Research Centre, agrees.

A classically trained musician, Assoc Prof Chiera gained a PhD in maths before shifting her attention to AI. She is particularly interested in how it can help preserve arts and culture.

“When you have such a powerful tool, you need to take on the responsibility to understand how to use it ethically,” Assoc Prof Chiera says.

She says there are risks in placing too much trust in the outputs by not questioning the inputs, but these can be managed.

One of her current projects involves using AI to preserve indigenous languages.

“We’re working with colleagues in New Caledonia to develop AI tools to preserve one of their endangered indigenous languages. As a remote language, a lot of it isn’t written, so there are complexities in translating and recording imagery and song.

“The AI programming will create resources where, for example, you can hover over the words and the translation will come through as sound.”

She says ensuring accuracy is integral to the process, and an important step is having the AI-generated texts assessed by language experts.

Assoc Prof Chiera believes AI discussions should be integrated into all areas of study, since most workers of the future will need some understanding of the opportunities and limitations. This approach is encouraged throughout UniSA.

“In the business courses I teach, my focus is ensuring students understand how to bring AI into the business world and how it can be used to augment and enhance human ability. It’s also critical that they understand its limits.

“By prioritising fairness, inclusivity and responsible innovation, we can all navigate the transformative power of AI to make sure it benefits society as a whole.”

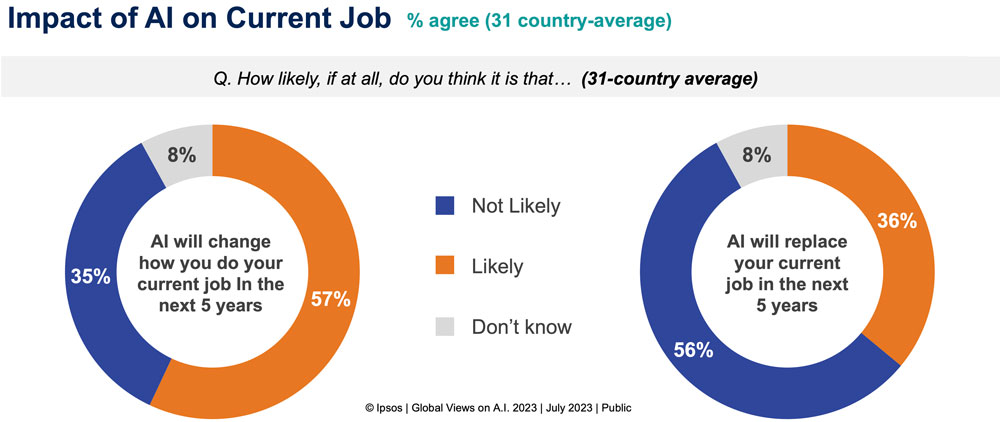

Global Views On AI

How people across the world feel about artificial intelligence and expect it will impact their life

Base: 22,816 adults under the age of 75 across 31 countries, interviewed May 26 – June 9, 2023; online only in all countries except India.

The “Global Country Average” reflects the average result for all the countries where the survey was conducted. It has not been adjusted to the population size of each country or market and is not intended to suggest a total result. The samples in Brazil, Chile, Colombia, India, Indonesia, Ireland, Malaysia, Mexico, Peru, Romania, Singapore, South Africa, Thailand, and Turkey are more urban, more educated, and/or more affluent than the general population.

© Ipsos | Global Views on A.I. 2023 | July 2023 | Public
